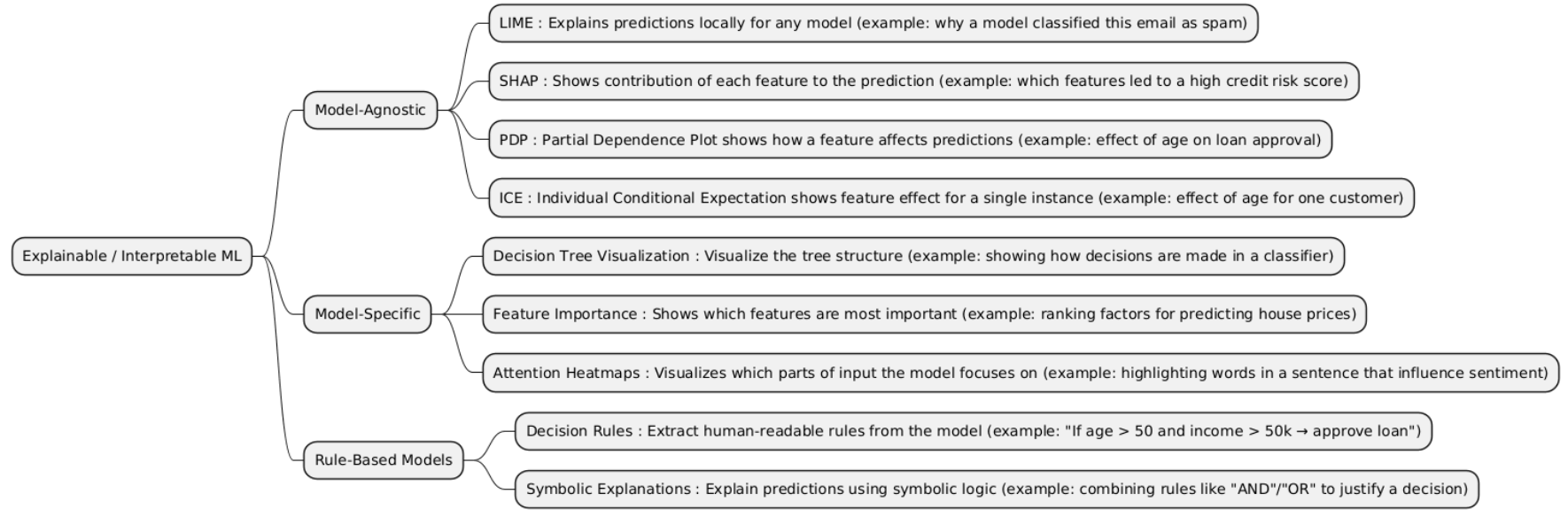

Explainable (or Interpretable) Machine Learning focuses on making machine learning models transparent and understandable. It helps users and developers understand how a model makes decisions, identify biases, and build trust, especially in critical applications like healthcare and finance.

| Type | What it is | When it is used | When it is preferred over other types | When it is not recommended | Examples of projects that is better use it incide him |

|---|---|---|---|---|---|

| Model-Agnostic | Model-Agnostic Explainability methods (like LIME, SHAP, and Partial Dependence Plots (PDP)) are tools that explain any machine learning model, regardless of its internal structure. They analyze how input features influence predictions by observing the model’s behavior externally (as a black box). | Used when you need to understand or justify predictions from complex or opaque models (e.g., neural networks, ensemble models) and cannot access their internal parameters. |

• Better than Model-Specific methods when the model is non-transparent (like deep learning or gradient boosting). • Ideal when you need a universal explanation framework that works across different model types. • Useful in regulated industries (finance, healthcare) where interpretability is required. |

• Not ideal for real-time systems (they can be computationally expensive). • Avoid when you already have inherently interpretable models (like decision trees or rule-based systems). • Can produce approximate or unstable explanations for highly nonlinear models. |

• Credit risk scoring system: Using SHAP to explain why a customer was denied a loan. • Medical diagnosis AI: Using LIME to highlight which symptoms influenced a prediction. • Customer churn prediction: Using PDP to visualize how customer age or activity affects churn probability. |

| Model-Specific | Model-Specific Explainability methods are techniques built into certain models to make them inherently interpretable. Examples: • Decision Trees: show decisions as paths. • Attention Mechanisms: reveal what parts of input the model focuses on. • Rule-Based Models: express logic in human-readable rules. |

Used when you want direct, built-in interpretability from the model itself — not just post-hoc explanations. Perfect for understanding how a specific model works internally. |

• Better than Model-Agnostic when you design the model yourself and want true interpretability, not approximations. • Ideal when the goal is trust + transparency (like in healthcare, law, or finance). • Great when the model itself is simple or structured, e.g., decision trees or models with attention maps. |

• Not suitable for very complex data (images, audio, text) where interpretable models can’t match performance of black-box models. • Avoid when you need maximum accuracy over interpretability. • Less useful when you’re using ensemble or deep models that don’t have clear internal reasoning. |

• Medical diagnosis system using Decision Trees to show clear rules behind each diagnosis. • Machine translation model using Attention Maps to visualize which source words influenced each translation. • Fraud detection using Rule-Based Models to explain why a transaction was flagged. |

# Install SHAP if not installed

# !pip install shap scikit-learn

import shap

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

# --- Load dataset ---

X, y = load_iris(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# --- Train a model ---

model = RandomForestClassifier(random_state=42)

model.fit(X_train, y_train)

# --- Explain predictions with SHAP ---

explainer = shap.Explainer(model, X_train) # model-agnostic

shap_values = explainer(X_test)

# --- Visualize SHAP values for first sample ---

shap.plots.waterfall(shap_values[0])

# --- Optional: summary plot for all test samples ---

shap.plots.beeswarm(shap_values)

# Install LIME if not installed

# !pip install lime scikit-learn

import lime

import lime.lime_tabular

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

# --- Load dataset ---

X, y = load_iris(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

feature_names = load_iris().feature_names

class_names = load_iris().target_names

# --- Train a model ---

model = RandomForestClassifier(random_state=42)

model.fit(X_train, y_train)

# --- Create LIME explainer ---

explainer = lime.lime_tabular.LimeTabularExplainer(

training_data=X_train,

feature_names=feature_names,

class_names=class_names,

mode='classification'

)

# --- Explain a single prediction ---

i = 0 # index of test sample

exp = explainer.explain_instance(X_test[i], model.predict_proba, num_features=4)

# --- Show explanation ---

exp.show_in_notebook(show_table=True) # for Jupyter Notebook

# or for console:

print(exp.as_list())

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

import pandas as pd

# --- Load dataset ---

X, y = load_iris(return_X_y=True)

feature_names = load_iris().feature_names

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# --- Train a model ---

model = RandomForestClassifier(random_state=42)

model.fit(X_train, y_train)

# --- Get feature importance ---

importances = model.feature_importances_

# --- Display in a table ---

feature_importance_df = pd.DataFrame({

'Feature': feature_names,

'Importance': importances

}).sort_values(by='Importance', ascending=False)

print(feature_importance_df)